Data Analysis

Analyzing quantitative data

There are several steps to analyzing quantitative data, such as cleaning the data set, coding or renaming variables, gathering descriptive data, and analyzing correlations. Below are things to consider including in your analysis process, which can differ based on your evaluation design, the data you collected, and what you want to find out from the evaluation. Statistical analyses can be complicated and challenging if you have no previous experience analyzing quantitative data. You can always ask a statistician or someone with more experience for assistance with this part of your evaluation, such as a student trained in this area who could assist you in exchange for permission to use the analysis in a paper. You could also consider taking a statistics course at a local college or online to learn how to analyze data using a basic spreadsheet and your own calculations, or using a statistical software program. If, after reading this chapter, you feel that rigorous program evaluation is beyond the capacities of your project, see if the resources in Chapter 7: Tips and Tools can help you.

Input data

- Before inputting your data, determine who will have access to the data files, when the data will be entered after it has been collected, how the data can be accessed, and where files will be stored in a secure manner. Consider creating a data entry schedule if the data collection process is ongoing or occurs at more than one point in time.

- Type data into a spreadsheet such as Excel or into a statistical software program like SAS (http://www.sas.com/en_us/home.html), SPSS (http://www.ibm.com/analytics/us/en/technology/spss/), or Epi Info (https://www.cdc.gov/epiinfo/index.html), a free program by the CDC for public health professionals [4].

- Go through and clean your data. Check for any errors (typing, spelling, upper or lower case inconsistencies, etc.), duplicates, missing values, and incomplete, inconsistent or invalid responses [4]. Excel provides tools and instructions to help you clean your data: https://support.office.com/en-us/article/Top-ten-ways-to-clean-your-data-2844b620-677c-47a7-ac3e-c2e157d1db19

- If participation in your assessment is anonymous, make sure the data set does not include any identifiable information. You can create an identification number for each response so that you can keep track of responses and refer back to them when needed.

- Protect or restrict access to the data set to ensure that the information cannot be retrieved by someone who does not have permission or authority to access it. Take special precautions if the evaluation is confidential.

- Back up your data by saving a duplicate version of the data set.
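The cleaning checks above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the column names (`respondent`, `handwashing`, `score`) and all the values are made up, and a real data set would need checks tailored to its own questions.

```python
import csv
import io

# Hypothetical raw survey export; column names and values are made up.
RAW = """respondent,handwashing,score
A. Lopez,Always,85
B. Chen,always ,90
A. Lopez,Always,85
C. Diaz,,72
D. Kim,Sometimes,abc
"""

def clean_rows(raw_text):
    """Return (cleaned rows, list of problems found) after basic checks."""
    seen = set()
    cleaned, problems = [], []
    reader = csv.DictReader(io.StringIO(raw_text))
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        # Normalize stray spaces and upper/lower case inconsistencies.
        answer = row["handwashing"].strip().lower()
        score_text = row["score"].strip()
        key = (row["respondent"].strip(), answer, score_text)
        if key in seen:
            problems.append((line_no, "duplicate"))
            continue  # keep only the first copy of a duplicated response
        seen.add(key)
        if not answer:
            problems.append((line_no, "missing handwashing response"))
        if score_text.isdigit():
            score = int(score_text)
        else:
            problems.append((line_no, "invalid score"))
            score = None
        # Store an identification number instead of the respondent's name.
        cleaned.append({"id": len(cleaned) + 1,
                        "handwashing": answer or None,
                        "score": score})
    return cleaned, problems

rows, problems = clean_rows(RAW)
for problem in problems:
    print(problem)
```

Flagging problems rather than silently fixing them leaves the decision about each questionable response with the evaluator.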

Clarify your objectives and approach

- Think about what you want to find out from the data based on your evaluation objectives and the questions you asked evaluation participants. You may want to meet with stakeholders or partners before analyzing the data to make sure everyone is on the same page.

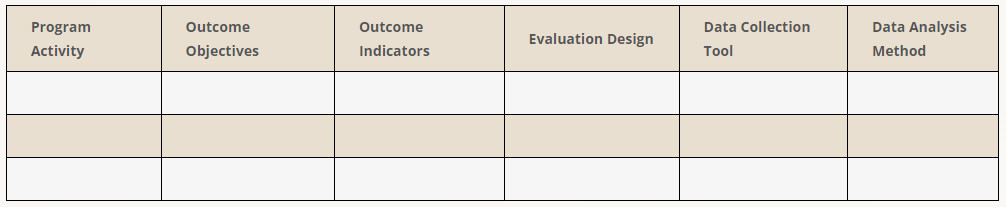

- Look at program activities, outcomes and indicators, the evaluation design, and data collection tools to help you identify what you need to analyze and how. You can use a table such as the one below to help you plan.

- Decide on a consistent way to code and organize the data set. Rename variables or categorize questions and responses based on your evaluation needs.

- Determine whether or not you want to group or dichotomize certain variables. For example, you may want to group open-ended responses to “how many individuals live in your household?” into two categories: [1-3 individuals] and [4 or more individuals].

- Decide if you want to include outliers (unusual and often extreme values that differ significantly from the rest of the data points) for each variable [4]. Outliers can sometimes significantly impact your findings, so it is helpful to think about why they may have occurred: whether they are accidental, such as data entry errors, or valid data points that should be included in the analysis.
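As a rough illustration of grouping a variable and screening for outliers, the sketch below uses made-up household sizes. The 1.5 x interquartile range rule used to flag outliers is one common rule of thumb, not a method prescribed by this guide, and the function names are hypothetical.

```python
import statistics

# Made-up household sizes reported by participants (illustrative only).
household_sizes = [1, 2, 2, 3, 3, 4, 4, 5, 6, 15]

def dichotomize(size, cutoff=4):
    """Place a household size into one of two categories."""
    return "4 or more" if size >= cutoff else "1-3"

groups = [dichotomize(size) for size in household_sizes]

def flag_outliers(values):
    """Flag values more than 1.5 x IQR below the first or above the third quartile."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < low or v > high]

outliers = flag_outliers(household_sizes)
print(groups.count("1-3"), groups.count("4 or more"), outliers)
```

A flagged value is only a prompt to check the original response; whether to keep it remains a judgment call.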

Gather descriptive data

- Explore demographic characteristics of evaluation participants. Start by identifying what you want to find out, such as:

- Is the sample diverse in age, gender, and socioeconomic status?

- Does the sample consist of mostly one gender or individuals from one geographic location?

- If your evaluation design included a control group you can compare demographic data between groups to find out if they are equivalent in terms of the characteristics you collected data on.

- When applicable, look at the evaluation participants’ pre- and post-scores. Start by clarifying what you want to find out. For example you may want to ask:

- How were the scores distributed?

- Did scores improve over time?

- Did program participants perform better than non-participants in the post-test?

- Look for data on different measures such as the mean, median, mode, percentage, distribution, and dispersion. Here’s what each of the measures can tell you [5]:

- Mean: Provides the average value. This is the sum of all the values divided by number of values.

- Median: Tells you the middle value in the whole set of values.

- Mode: This is the value that occurs most frequently.

- Percentage: Can tell you what percentage of participants reached a target value, or scored above or below a certain value.

- Distribution: Can tell you the frequency or range of values for a certain variable. For example, you can divide food safety knowledge scores into different categories (0-25%, 25-50%, 50-75%, and 75-100%) to find out what percent of participants scored within each range.

- Dispersion – range, standard deviation, and variance: Tells you how the values are spread around the mean. There are three ways to look at the dispersion:

- Range: The highest value minus the lowest value.

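- Standard deviation: Describes how far values typically fall from the mean, in the same units as the data. Because it is built from squared differences, it is sensitive to outliers. To calculate it, take the square root of the variance (see below).

- Variance: The average squared difference from the mean; like the standard deviation, it is sensitive to outliers. To calculate: 1. Find the mean. 2. Subtract the mean from each value and square the result. 3. Find the sum of all the squared differences. 4. Divide the sum by the number of values (statistical software often divides by the number of values minus one when the data are a sample).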

- Find out if the data has a normal distribution. In a normal distribution the mean, median, and mode are all equal, the values are spread symmetrically around the mean, and 50% of the values are less than the mean and 50% are more than the mean. (In the standard normal distribution, the mean is 0 and the standard deviation is 1.)

- If your data is normally distributed, find confidence intervals to know how likely it is that true population results would fall within the range of values set by the confidence limits [4]. For example, a 95% confidence interval gives a range, roughly the sample mean plus or minus two standard errors, that you can be 95% confident contains the true population mean.

- Link and compare data [4]. Examples of ways you could link different data sets include:

- Linking intervention and control (comparison) group data to find any differences in the outcome variable.

- Linking factors such as program participation or exposure to food safety messages with data on the outcome variable to find out how different factors influence the results.

- Comparing microbial samples to self-reported data on food handling to explore validity of data collected.
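The descriptive measures listed above can be computed with Python's built-in `statistics` module. The scores below are made up for illustration, the target score of 70 is a hypothetical cutoff, and the confidence interval uses the normal critical value 1.96 as an approximation (with small samples, a t-based interval would be wider).

```python
import math
import statistics

# Made-up food safety knowledge scores (percent correct), illustrative only.
scores = [50, 60, 60, 70, 70, 70, 80, 80, 90]

mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)
value_range = max(scores) - min(scores)   # highest value minus lowest value
variance = statistics.pvariance(scores)   # sum of squared differences / n
std_dev = math.sqrt(variance)             # square root of the variance
sample_sd = statistics.stdev(scores)      # divides by n - 1 (sample estimate)

# Percentage of participants at or above a hypothetical target score of 70.
pct_at_target = 100 * sum(s >= 70 for s in scores) / len(scores)

# Approximate 95% confidence interval for the mean.
std_error = sample_sd / math.sqrt(len(scores))
ci_low = mean - 1.96 * std_error
ci_high = mean + 1.96 * std_error

print(mean, median, mode, value_range)
print(round(std_dev, 2), round(pct_at_target, 1))
print(round(ci_low, 1), round(ci_high, 1))
```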

Examine change and association

- Measure differences between pre- and post-test results and find out whether the outcome variable increased, decreased, or stayed the same. Keep in mind that if your outcome variable was high when collecting baseline or pre-test data it will be difficult to measure and notice any change.

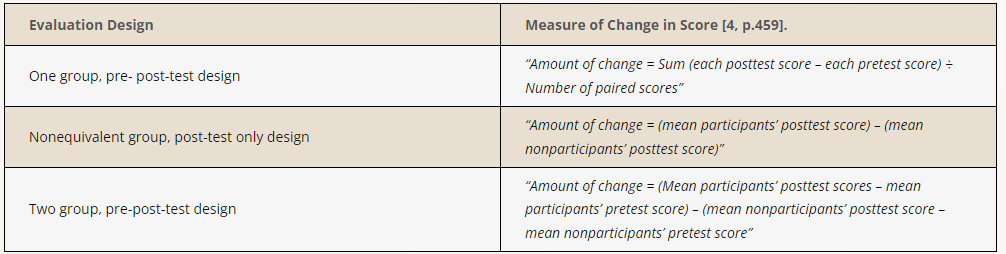

The table below explains how to calculate a change in score (e.g. knowledge test or household audit score) for different evaluation designs [4].

- Look at associations or correlations. Remember that you can only assess a causal relationship if you used an experimental design [4]. Find out whether there is a positive or negative association or correlation between your program and the outcome variable, or if it is a null outcome, which would occur if your program shows no effect on a particular variable.

- Find out how different characteristics or demographic factors influence the outcome variable.

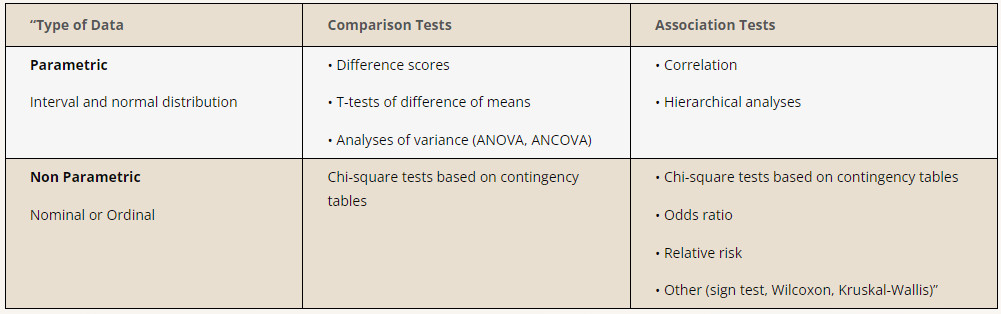

- When making comparisons and examining associations, different statistical tests are needed depending on the type of data. Interval (continuous) data, such as the temperature of a refrigerator, are typically analyzed with parametric tests. Categorical data are typically analyzed with nonparametric tests and can be nominal (gender) or ordinal (rating score of a workshop: 1. poor, 2. satisfactory, 3. good, 4. excellent).

The table below shows commonly used parametric and nonparametric statistical tests for comparison or association tests [4, p.471]:

- Explore the statistical significance of your findings to find out the probability of an observed result occurring by chance [4]. Statistical significance is usually reported as a p value below 0.05 or 0.01, meaning that a result at least as large as the one observed would be expected less than 5% or 1% of the time if chance alone were at work.

- Explore what moderating or mediating variables may have influenced the outcome data when examining associations [4]. A moderating variable is a variable that influences the strength or direction of the relationship between the independent and outcome variable [4]. For example, a past experience of food poisoning can be a moderating variable if its occurrence results in a stronger association between participating in a food safety workshop and reports of safe food handling practices. A mediating variable is a variable that is part of the causal pathway between the independent and outcome variable [4]. For example, possessing a thermometer at home can be a mediating variable between learning about safe cooking of meats and using a thermometer to check the internal temperature of cooked meat, poultry, and egg dishes. Owning a thermometer is a mediator in this case because a person cannot use a thermometer without owning one.

Explore other factors

- Analyze attrition to find out how loss to follow up might have affected or biased your findings. An attrition estimate can be calculated by dividing the number of individuals lost to follow up by the number of individuals at baseline ((# of evaluation participants at baseline - # of evaluation participants at follow up) ÷ # of evaluation participants at baseline). Attrition should ideally be less than 10%; if it is greater than 10%, you can run a logistic regression model to examine whether attrition is related to any demographic factors [1].
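As a small worked example, taking attrition to mean the share of baseline participants who were lost by follow up, the calculation could look like the sketch below. The participant counts and the function name are hypothetical.

```python
def attrition_rate(n_baseline, n_follow_up):
    """Percentage of baseline participants lost by follow up."""
    if n_baseline <= 0:
        raise ValueError("baseline count must be positive")
    return 100 * (n_baseline - n_follow_up) / n_baseline

# Hypothetical counts: 200 participants at baseline, 170 at follow up.
rate = attrition_rate(n_baseline=200, n_follow_up=170)

# Above the 10% guideline, check whether dropouts differ demographically.
needs_attrition_analysis = rate > 10
print(rate, needs_attrition_analysis)
```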

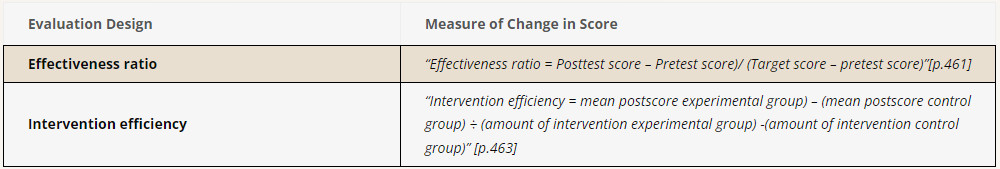

- Explore change related to a target outcome (effectiveness) or related to intervention effort (efficiency).

You can test the effectiveness and efficiency of the program using the calculations below [4]:

An evaluation of a Fight BAC! food safety campaign targeted to an urban Latino population in Connecticut examined campaign coverage, consumer satisfaction, and influence on food safety knowledge, attitudes, and behaviors. To collect evaluation data, a cross-sectional pre- and post-test survey was provided to 500 Latino consumers. Analysis of the data was conducted using SPSS, and categorical responses with more than two categories were recoded into only two categories. For example, when coding data, response options “hardly ever” and “sometimes” were put in one category and “frequently” and “always” in another. Analyses showed that recognition of the campaign logo increased from 10% to 42% (P<.001). Participants exposed to campaign messages were more likely to have an “adequate” food safety knowledge score and were more likely to self-report proper procedures for defrosting meat (14% vs 7%; P = .01). In addition, a dose-response relationship was found between exposure to the campaign and awareness of the term “cross-contamination.” Out of four media sources (radio, television, newspaper/magazine, and poster), television and radio had the highest levels of exposure.

Dharod, J. M., Perez-Escamilla, R., Bermudez-Millan, A., Segura-Perez, S., & Damio, G. (2004). Influence of the Fight BAC! food safety campaign on an urban Latino population in Connecticut. Journal of Nutrition Education and Behavior, 36.

Analyzing qualitative data

There are several steps to analyzing qualitative data, which can differ depending on the data collected and your evaluation needs. Below are steps often included in a qualitative analysis [adapted from 4]:

- Transcribe audio-recorded or videotaped evaluation data, when applicable. You can transcribe responses into a Word document or spreadsheet, or use a software program such as Atlas.ti (http://atlasti.com/).

- If participation in the evaluation is anonymous, do not include any identifiable information in the transcription. You can create an identification number for each evaluation response instead of using names of the participants.

- Decide if you want to have a starting list of relevant and important themes to look for in the data transcripts. For example, you may have conducted some background research and found that financial constraints can significantly influence food safety practices, so you might want to include financial constraints as a theme to look for in the data.

- With qualitative data, reliability is often confirmed through multiple analyses of interview transcripts by more than one researcher [2]. Consider finding another researcher or staff member to independently review the transcripts and identify themes.

- Conduct a thematic analysis by reading through the entire transcript and identifying important recurring patterns and themes.

- Following the initial review of transcripts and identification of themes, researchers should meet with each other to review findings and come to an agreement on themes that are meaningful and important.

- Categorize identified themes and patterns. Share the identified themes with stakeholders and partners to identify priority themes and to refine and confirm final themes that will be used in the analysis. You may want to include subthemes within each theme.

- Consider re-organizing data under final themes and subthemes. You may want to go through the transcript to mark and code the data based on each theme or subtheme.

- Develop a narrative of the research findings and create a report that discusses final themes. Consider including direct quote examples and descriptions of the importance and implications of each theme.

- Interpret identified themes and subthemes to form conclusions about your program.
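Once transcripts have been coded, a short script can help tally themes and show where two reviewers disagree before they meet. The sketch below is only an illustration: the participant IDs, theme names, and the two reviewers' codes are all made up, and dedicated qualitative software offers far richer tools.

```python
from collections import Counter

# Hypothetical coded transcripts: each participant ID maps to the set of
# themes a reviewer assigned to that transcript (all values illustrative).
coder_a = {
    "P01": {"financial constraints", "time pressure"},
    "P02": {"financial constraints"},
    "P03": {"lack of equipment", "time pressure"},
}
coder_b = {
    "P01": {"financial constraints"},
    "P02": {"financial constraints"},
    "P03": {"lack of equipment", "time pressure"},
}

# Number of transcripts in which the first reviewer found each theme.
theme_counts = Counter(theme for themes in coder_a.values() for theme in themes)

# Themes both reviewers assigned to the same transcript.
agreed = {pid: coder_a[pid] & coder_b[pid] for pid in coder_a}

# Themes only one reviewer assigned, to discuss when reviewers meet.
disputed = {pid: coder_a[pid] ^ coder_b[pid]
            for pid in coder_a if coder_a[pid] != coder_b[pid]}

print(theme_counts.most_common())
print(disputed)
```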

Share your findings

- Use color-coded graphs and tables to share findings, especially for quantitative data. Excel and other data analysis programs usually offer tools to help you translate data into tables, charts, and graphs.

- Determine what parts of your analysis are most relevant or important to share and include in a final report. You may want to create multiple reports for different stakeholders. For example, you may want to have slightly different versions for funders, partners, and community members depending on what is most relevant to them and what they want to know.

- Strengthen your evaluation report by using mixed methods to share findings. Provide quantitative findings that are supplemented by qualitative personal narratives that offer further insight into participants’ perceptions and behaviors and their responses. Use direct quotes to tell a story about how your program impacted participants.

- Facilitate a discussion about your findings with other staff, partners, and stakeholders. Reflect on what parts of the program worked, what didn’t, and how evaluation data can be used to improve the program. Use evaluation findings to answer questions such as:

- Can any changes be made to better address the needs of the target audience and any barriers it is facing related to food safety?

- Do any education strategies and methods need to be modified for the program to be more effective in influencing food safety knowledge, attitudes, skills, aspirations, and behavior?

- Do program staff or volunteers require additional training or support in a particular area?

- Are there any additional resources we could use to maximize our impact?

- Does additional evaluation or research need to be conducted to formulate better conclusions about the program?

- Publish your findings and share what you have learned with other educators and decision makers, when applicable. Because there are gaps in current consumer food safety education research, it is important that new insights and lessons are shared and published, such as through peer-reviewed journals [3]. Examples of publications you could submit articles to include:

- Journal of Food Science Education: http://www.ift.org/knowledge-center/read-ift-publications/journal-of-food-science-education.aspx

- Journal of Nutrition Education and Behavior: http://www.jneb.org/

- Journal of Extension: http://www.joe.org/

- Food Protection Trends: https://www.foodprotection.org/publications/food-protection-trends/

- Health Education Journal: http://hej.sagepub.com/

- Health Education & Behavior: http://heb.sagepub.com/

- Journal of Food Protection: https://www.foodprotection.org/publications/journal-of-food-protection/

- Remember that it is not only important to share what strategies work, but also what strategies don’t work. By sharing failures and challenges in addition to strengths and successes, other educators can learn about best practices and what methods to avoid. It is also valuable to share any validated instruments or resources you have developed for your program so that other educators and researchers do not have to reinvent the wheel when useful and applicable tools already exist.

- Be creative when it comes to sharing your findings and what you learned from the evaluation. In addition to submitting an article to a peer reviewed journal you can:

- Post evaluation data on your website or blog and link to it through social media channels such as Twitter or Facebook.

- Create a PowerPoint presentation of findings to share with local educators at a workshop event.

- Plan and host a webinar to share your findings with individuals who may not be able to attend an in-person event.

- Submit conference abstracts to present your research at food safety, public health, or education conferences.

In Summary

When thinking about data analysis and how to apply what you learned in this chapter to your program, you may want to ask:

- How will I track and store evaluation data?

- What are the objectives of my data analysis? What do I need to find out?

- How will I analyze the data?

- How will data be reported and shared with stakeholders?

References

- Cates, S., Blitstein, J., Hersey, J., Kosa, K., Flicker, L., Morgan, K., & Bell, L. (2014). Addressing the challenges of conducting effective supplemental nutrition assistance program education (SNAP-Ed) evaluations: a step-by-step guide. Prepared by Altarum Institute and RTI International for the U.S. Department of Agriculture, Food and Nutrition Service. Retrieved from: http://www.fns.usda.gov/sites/default/files/SNAPEDWaveII_Guide.pdf

- Creswell, J. W. (2007). Qualitative inquiry and research design (2nd Ed.) Thousand Oaks, CA: Sage Publications.

- Food and Drug Administration (FDA). White Paper on Consumer Research and Food Safety Education. (DRAFT).

- Issel, L. (2004). Health Program Planning and Evaluation: A Practical, Systematic Approach for Community Health. London: Jones and Bartlett Publishers.

- Trochim, W. M. K. (2006). Analysis. Retrieved from: http://www.socialresearchmethods.net/kb/analysis.php