Mapping the Intervention and Evaluation

Steps for a program evaluation

There are six evaluation steps to consider as you plan your intervention and evaluation [6]:

- “Engage stakeholders

- Describe the program

- Focus the evaluation design

- Gather credible evidence

- Justify conclusions

- Ensure use and share lessons learned”

The next chapters will go through these steps in more detail, but it is helpful to have a framework or overview to think about before you begin planning. Applying the Utility, Feasibility, Accuracy, and Propriety evaluation standards discussed in the first chapter to these steps can help ensure that your evaluation is rigorous and thorough.

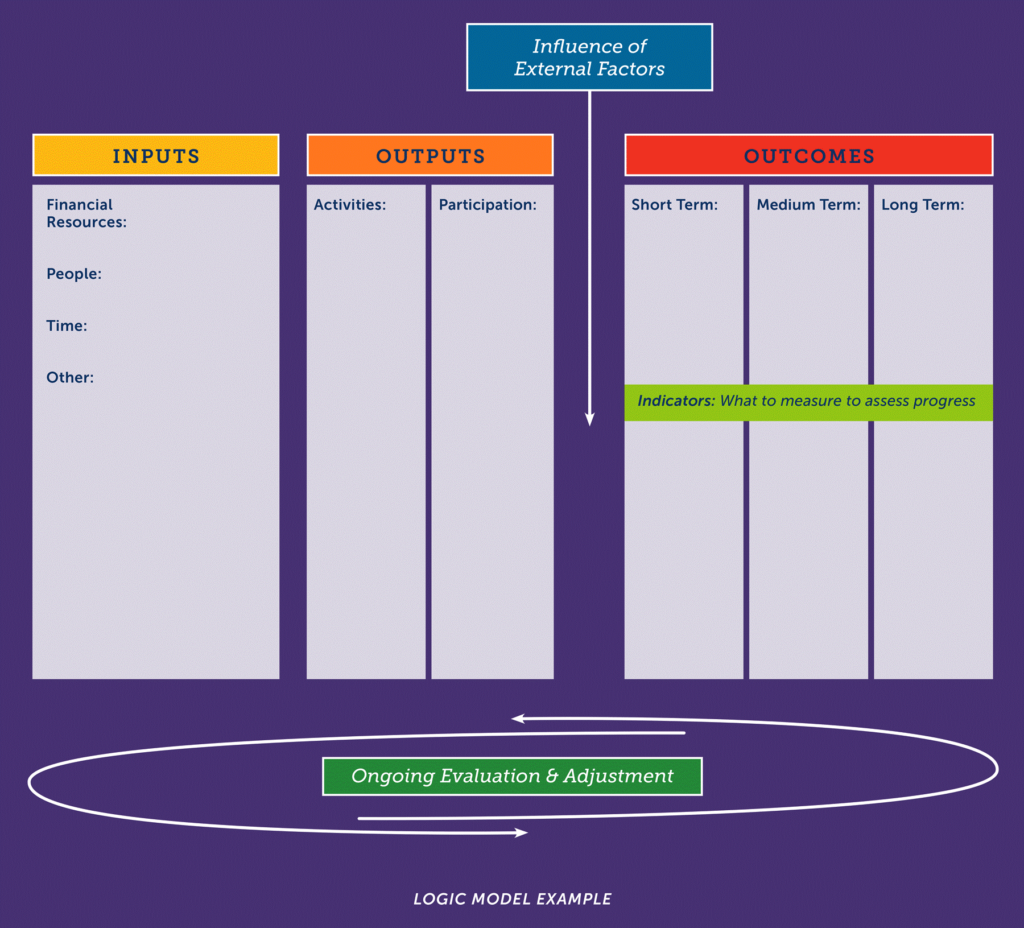

Create a logic model

Creating a logic model can help you describe your program by identifying program priorities, mapping out the program's components, and understanding how they are linked. A logic model can also help you identify the short-, medium-, and long-term outcomes of the program and decide what to evaluate and when. Finally, it can help program staff, stakeholders, and everyone else involved with implementation and evaluation understand the overall framework and strategy for the program.

A logic model generally includes inputs (e.g. resources, materials, and staff support), outputs (e.g. activities and participation), and outcomes (short, medium, and long term). As you pinpoint program outcomes you should also identify corresponding indicators. An indicator is the factor or characteristic you need to measure to know how well you are achieving your outcome objectives. Identifying outcome indicators early in the planning process can help clarify program priorities and expectations.
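If you keep your planning notes in electronic form, the parts of a logic model described above can be held in a simple data structure so that each outcome stays linked to its indicator. The Python sketch below is only an illustration: the class names `Outcome` and `LogicModel` and all example entries are hypothetical, loosely based on the cross-contamination program used in this chapter, and are not the content of the example figure. It needs Python 3.9 or later.

```python
from dataclasses import dataclass, field

@dataclass
class Outcome:
    """One intended outcome and the indicator used to measure it (hypothetical structure)."""
    description: str
    term: str        # "short", "medium", or "long"
    indicator: str   # the factor you will measure to know whether the outcome is achieved

@dataclass
class LogicModel:
    inputs: list[str] = field(default_factory=list)    # resources, materials, staff support
    outputs: list[str] = field(default_factory=list)   # activities and participation
    outcomes: list[Outcome] = field(default_factory=list)

    def outcomes_by_term(self, term: str) -> list[Outcome]:
        """Return the outcomes for one time frame (short, medium, or long term)."""
        return [o for o in self.outcomes if o.term == term]

    def missing_indicators(self) -> list[str]:
        """List outcomes that do not yet have an indicator assigned."""
        return [o.description for o in self.outcomes if not o.indicator.strip()]

# Hypothetical entries for a program to reduce cross-contamination
model = LogicModel(
    inputs=["Staff time", "Curriculum materials"],
    outputs=["Four food safety workshops", "Adults reached"],
    outcomes=[
        Outcome("Participants know to use separate cutting boards for raw meat", "short",
                "% of participants answering the post-test item correctly"),
        Outcome("Participants separate raw meat from ready-to-eat foods at home", "medium",
                "% reporting the practice at follow-up"),
        Outcome("Fewer cases of foodborne illness linked to cross-contamination", "long", ""),
    ],
)

print(len(model.outcomes_by_term("short")))  # 1
print(model.missing_indicators())
```

Listing outcomes without an indicator is one quick way to follow the advice above about identifying indicators early in planning.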

There is no single right way to create a logic model. You may decide to make one model for your entire program or a separate model for each program activity. Make it your own, and remember to take into account the needs of your target audience, the program setting, and your resources.

Below is an example of a logic model created for a program aiming to reduce foodborne illness caused by cross-contamination of foods [adapted 11,12]. You can create your own logic model using the template provided in Chapter 7.

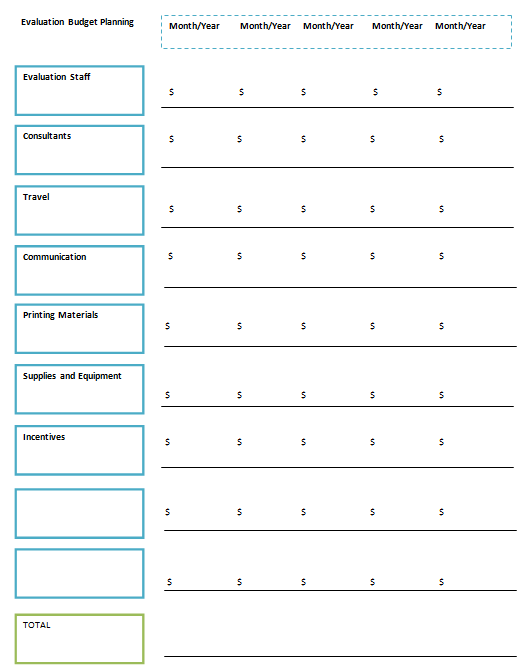

Budget

When planning your program budget, make sure you account for evaluation costs. The general recommendation is to use 10% of program funding for evaluation [13]. Your budget can be flexible, and you should review it over time and adjust it if needed [14]. Document your spending and keep track of expenses. You should also track and take inventory of program supplies and materials so that you don’t purchase more than you need, which reduces waste and maximizes efficiency. If possible, designate a team member to keep track of spending and funds.
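As a worked example of the 10% recommendation, the Python sketch below computes an evaluation set-aside and subtracts logged expenses from it. The total funding amount, the expense items, and the function names are hypothetical; only the 10% share comes from the recommendation cited above.

```python
# Share of program funding set aside for evaluation, per the general recommendation [13].
EVALUATION_SHARE = 0.10

def evaluation_budget(total_funding: float, share: float = EVALUATION_SHARE) -> float:
    """Amount of total funding to set aside for evaluation."""
    return round(total_funding * share, 2)

def remaining(budget: float, expenses: list[tuple[str, float]]) -> float:
    """Budget left after subtracting every logged (description, amount) expense."""
    return round(budget - sum(amount for _, amount in expenses), 2)

# Hypothetical figures
total = 50_000.00
eval_budget = evaluation_budget(total)
expenses = [
    ("Printing pre/post surveys", 350.00),
    ("Data entry (staff hours)", 1200.00),
]
print(eval_budget, remaining(eval_budget, expenses))  # 5000.0 3450.0
```

Keeping expenses as a running list makes it easy to review the budget over time and adjust it, as suggested above.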

Use the template in Chapter 7 to help you plan your budget and keep track of spending [adapted 14].

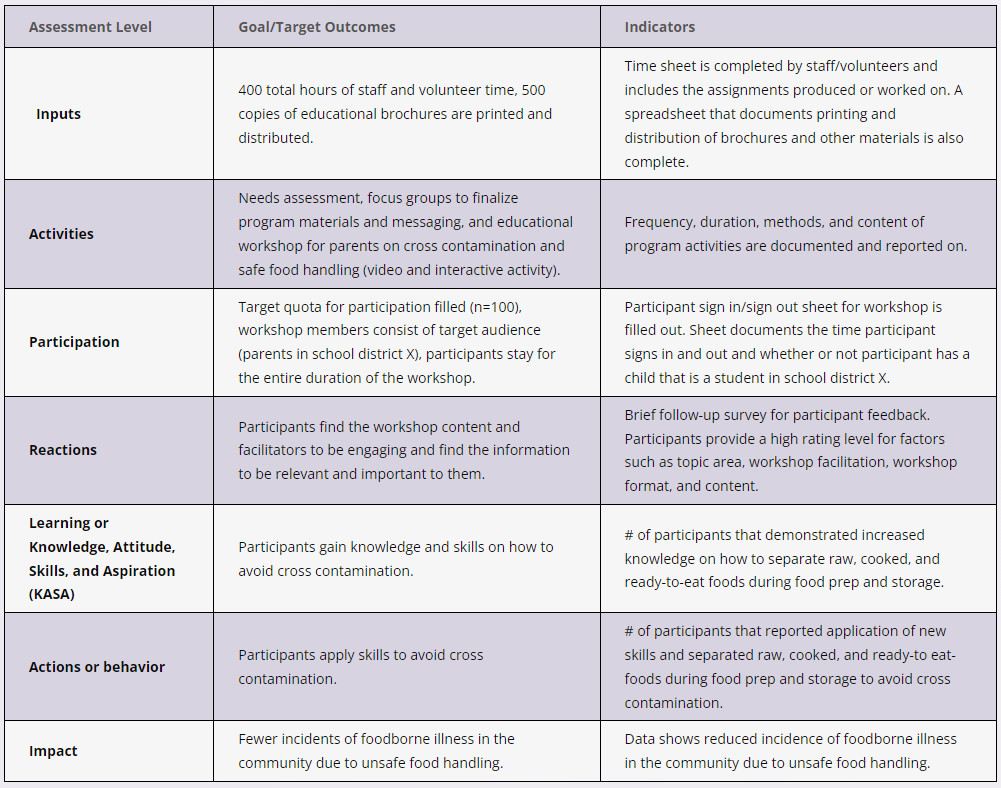

Levels of assessment

A thorough program evaluation generally includes seven incremental levels of assessment. Consider which of them to include in your evaluation, depending on your resources and evaluation needs [5,10]:

- Program inputs and resources. These include staff and volunteer time, monetary resources, transportation, and program supplies required to plan, implement, and evaluate program activities.

- Education and promotion activities for the target audience. Includes activities with direct contact and indirect methods such as mass media campaigns.

- Participant involvement in program activities. Can include frequency, duration, and intensity of participation or people reached.

- Positive or negative reactions, interest level, and ratings from participants about the program. Can include feedback on program activities, program topics, educational methods, and facilitators.

- Changes or improvements in knowledge (learning), attitudes, skills, and aspirations (KASA). These changes can occur as a result of positive reactions to participation in program activities.

- New practices, actions, or behavior changes that occur when participants apply the new KASA they gained in the program.

- Changes, impact, or benefits from the program to social, economic, and environmental circumstances.

Each level addresses important elements you could evaluate to understand the impact and outcomes of your program, as well as program strengths and challenges.

The table below displays examples of outcomes and indicators for each of the seven assessment levels. The examples are based on a program aiming to reduce foodborne illness caused by cross-contamination of foods.

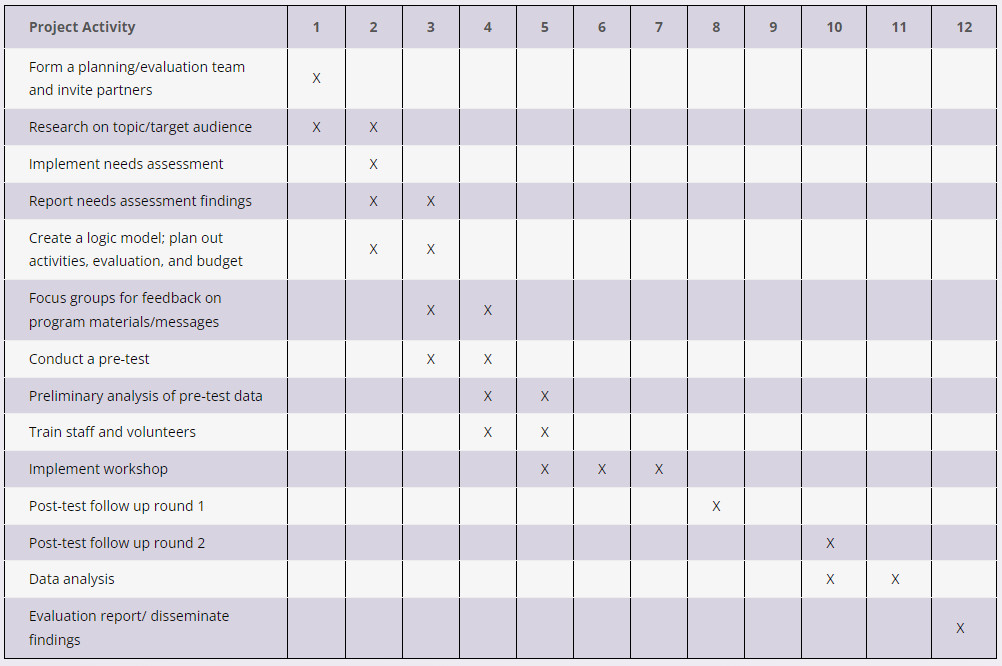

Create a timeline

Create a timeline to display important implementation and evaluation activities and specify when they need to be completed. This timeline can change over time, but it is important for you and other staff and partners to pre-plan and share a common understanding of when important tasks need to be accomplished.

Below is an example of a simple timeline for a year-long project and evaluation, using a Gantt chart. The chart displays important tasks that need to be accomplished, the durations for each task, when they begin and end, and how some tasks overlap with each other.

Gantt Timeline Example – Project Activity and Months
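A timeline like the one described above can also be generated from a simple task list, which makes it easy to update as plans change. The Python sketch below is only an illustration: the task names and month ranges are hypothetical, and `gantt_rows` and `overlaps` are helper names introduced here, not part of any template in this guide.

```python
# Hypothetical tasks for a year-long project: (task, start month, end month), months 1-12.
tasks = [
    ("Plan program and evaluation", 1, 2),
    ("Develop materials and surveys", 2, 3),
    ("Deliver workshops", 4, 9),
    ("Collect pre/post data", 4, 10),
    ("Analyze data", 10, 11),
    ("Share results", 12, 12),
]

def gantt_rows(tasks, months=12):
    """Return one text row per task, marking active months with '#' and others with '.'."""
    width = max(len(name) for name, _, _ in tasks)
    rows = []
    for name, start, end in tasks:
        bar = "".join("#" if start <= m <= end else "." for m in range(1, months + 1))
        rows.append(f"{name:<{width}} |{bar}|")
    return rows

def overlaps(a, b):
    """True if two (task, start, end) entries share at least one month."""
    return a[1] <= b[2] and b[1] <= a[2]

for row in gantt_rows(tasks):
    print(row)
```

The `overlaps` check is a quick way to see which tasks run at the same time and may compete for the same staff.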

Purpose of the evaluation

As you think about and plan your evaluation, make sure you clarify its purpose and what kind of information you want to find out. To do this, think about who (including stakeholders and partners) will use the data and how they will use it [2].

Below are examples of questions you might want to ask to help clarify the purpose of the evaluation:

- Do we need to provide evaluation data to funders to show that the benefits of the program outweigh the costs?

- Do we need to understand whether the program strategies used are effective in producing greater knowledge about food safety and positive behavior changes?

- Do we want to figure out whether the educational format and strategies we used can be a successful model for other educators to incorporate in their programs?

Identifying the overarching questions that need to be answered, who the evaluation is for, and how it will be used will help you determine exactly what you need to evaluate and how.

Process evaluation

The first three levels of assessment, related to program inputs, activities, and participation, are generally referred to as a process evaluation. A process evaluation is usually ongoing and tells you whether the program is being implemented as planned [9]. It can also help you figure out which activities have the greatest impact given their cost [1]. A process evaluation often consists of measuring outputs such as staff and volunteer time, the number of activities, the dosage and reach of activities, the participation and attrition of direct and indirect contacts, and program fidelity. A process evaluation can also involve finding out:

- If you are reaching all the participants or members of the target audience [9].

- If materials, activities, and other program components are of good quality [9].

- If all components of the program are being implemented [9].

- How satisfied participants are with the program [3,4,8,9].
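Reach and attrition, two of the process outputs mentioned above, are simple percentages. The Python sketch below assumes you know the size of your target audience and count how many people enrolled and how many completed the program; the function names and counts are hypothetical.

```python
def reach(participants: int, target_population: int) -> float:
    """Percentage of the target audience that took part in the program."""
    return 100 * participants / target_population

def attrition(enrolled: int, completed: int) -> float:
    """Percentage of enrolled participants who left before completing the program."""
    return 100 * (enrolled - completed) / enrolled

# Hypothetical counts: 400 people in the target audience, 120 enrolled, 96 completed
print(f"Reach: {reach(120, 400):.1f}%")         # Reach: 30.0%
print(f"Attrition: {attrition(120, 96):.1f}%")  # Attrition: 20.0%
```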

Program fidelity refers to whether, and to what extent, the program is implemented in the manner originally planned. For example, it can include whether staff follow the standards and guidelines they receive in a training, whether an activity takes place at the predetermined location and for the planned duration, or whether educators stick to the designated curriculum when teaching consumer food safety education.

Below are examples of how to assess program fidelity:

- Develop and provide training on program standards for data collection and management, and check over time that these standards are met.

- Evaluate program materials to make sure they are up-to-date and effective.

- Evaluate facilitators or educators to make sure they are up-to-date, knowledgeable, enthusiastic, and effective.

- Hold regular staff trainings and team meetings.

- Track activities (you can use the Activity Tracker Form in Chapter 7) to gather feedback on the implementation of program activities, learn about implementation challenges and how to address them, and provide support to staff, volunteers, and educators.

During the planning phase of your program, it may be helpful to create a spreadsheet template to document program inputs and outputs. Consider giving staff a form (such as the Activity Tracker Form in Chapter 7) so they keep track of important and relevant information, and set a schedule for when staff must submit completed forms.
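As one way to set up such a spreadsheet, the Python sketch below writes activity records to a CSV file (which opens in any spreadsheet program) and summarizes total participants and the share of activities delivered as planned. The column names are assumptions, not the fields of the Activity Tracker Form in Chapter 7; adapt them to that form. The records themselves are hypothetical.

```python
import csv
import io

# Hypothetical columns; adapt them to the Activity Tracker Form in Chapter 7.
FIELDS = ["date", "staff_member", "activity", "location", "duration_min",
          "participants", "followed_curriculum", "challenges"]

def write_tracker(rows, stream):
    """Write activity records to a CSV stream, one row per activity."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)

def summarize(rows):
    """Return (total participants, share of activities that followed the curriculum)."""
    if not rows:
        return 0, 0.0
    total = sum(int(r["participants"]) for r in rows)
    on_plan = sum(1 for r in rows if r["followed_curriculum"] == "yes")
    return total, on_plan / len(rows)

records = [
    {"date": "2017-03-01", "staff_member": "Educator A", "activity": "Handwashing demo",
     "location": "Community center", "duration_min": 60, "participants": 18,
     "followed_curriculum": "yes", "challenges": ""},
    {"date": "2017-03-08", "staff_member": "Educator B", "activity": "Cutting board workshop",
     "location": "Library", "duration_min": 45, "participants": 12,
     "followed_curriculum": "no", "challenges": "Room available for only 45 minutes"},
]

# An in-memory buffer keeps the example self-contained;
# use open("tracker.csv", "w", newline="") to save a real file instead.
buffer = io.StringIO()
write_tracker(records, buffer)
print(summarize(records))  # (30, 0.5)
```

A "followed_curriculum" column and a free-text "challenges" column are one way to capture program fidelity and implementation challenges in the same record.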

Outcome evaluation

The last few levels of assessment, related to changes in KASA, behaviors, or the environment, are generally part of an outcome evaluation. As discussed in the logic model section, you may want to identify three types of outcomes for your program: short-term, medium-term, and long-term. When determining outcome objectives, make sure they are SMART [1,7]:

- Specific – identify exactly what you hope the outcome will be and include the five W’s: who, what, where, when, and why.

- Measurable – quantify the outcome and the amount of change you want the program to produce.

- Achievable – be realistic in your projections and take into account assets, resources, and limitations.

- Relevant – make sure your objectives address the needs of the target audience and support the overarching mission of your program or organization.

- Time-bound – provide a specific date by which the desired outcome or change will take place.

Examples of SMART outcomes:

- At least 85% of participants in the Food Safety Workshop will learn at least two new safe practices for cooking and serving food at home by August 2017.

- 80% of food kitchen volunteers will wash their hands before serving meals after completing the final day of the handwashing training on October 2nd, 2017.

- 70% of children enrolled in the Food Safety Is Fun! summer camp will be able to identify the four core practices of safe food handling and explain at least one consequence of foodborne illness by the end of summer.

- By 2020, incidents of foodborne illness in the county will decrease by 10%.
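Because a SMART objective is measurable, checking whether it was met comes down to simple arithmetic. The Python sketch below applies the first example objective (at least 85% of participants learn at least two new safe practices) to a list of per-participant counts. The counts and the function name `objective_met` are hypothetical; how you measure "new practices learned" (for example, with a post-test) depends on your evaluation design.

```python
def objective_met(new_practices: list[int], min_practices: int = 2,
                  target_share: float = 0.85) -> tuple[float, bool]:
    """Return the share of participants reaching the threshold and whether the target was met."""
    reached = sum(1 for n in new_practices if n >= min_practices)
    share = reached / len(new_practices)
    return share, share >= target_share

# Hypothetical post-workshop counts of new safe practices learned, one per participant
results = [3, 2, 2, 4, 1, 2, 3, 2, 0, 2, 5, 2, 3, 2, 2, 2, 3, 2, 2, 4]
share, met = objective_met(results)
print(f"{share:.0%} reached the threshold; objective met: {met}")
# 90% reached the threshold; objective met: True
```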

Examples of food safety factors you may wish to measure and address in your outcome objectives include: knowledge, attitudes, beliefs, behaviors, and other influential factors such as visual cues or reminders, resources, convenience, usual habits, perceived benefits, taste preferences, self-efficacy, and perceived risk or susceptibility [2].

In Summary

When thinking about mapping your intervention and evaluation, and about how to apply what you learned in this chapter to your program, you may want to ask:

- What would be included in my logic model? What are my program inputs, outputs (activities/participation), outcomes (short, medium, and long term), and indicators?

- What is my evaluation budget? How will this budget be distributed?

- What is my timeline for program planning, implementation, and evaluation?

- What is the overall purpose of my program evaluation?

- Is a process evaluation feasible?

- If yes – what kind of information do I need to gather in a process evaluation?

- What can I do to ensure program fidelity?

- What are the SMART objectives of my program?

References

- Cates, S., Blitstein, J., Hersey, J., Kosa, K., Flicker, L., Morgan, K., & Bell, L. (2014). Addressing the challenges of conducting effective Supplemental Nutrition Assistance Program Education (SNAP-Ed) evaluations: A step-by-step guide. Prepared by Altarum Institute and RTI International for the U.S. Department of Agriculture, Food and Nutrition Service. Retrieved from: http://www.fns.usda.gov/sites/default/files/SNAPEDWaveII_Guide.pdf

- Food and Drug Administration (FDA). White Paper on Consumer Research and Food Safety Education. (DRAFT).

- Hawe, P., Degeling, D., & Hall, J. (2003). Evaluating health promotion: A health worker’s guide. Sydney: MacLennan and Petty.

- Issel, L. (2004). Health program planning and evaluation: A practical, systematic approach for community health. London: Jones and Bartlett Publishers.

- Kluchinski, D. (2014). Evaluation behaviors, skills and needs of cooperative extension agricultural and resource management field faculty and staff in New Jersey. Journal of the NACAA, 7(1).

- KU Work Group for Community Health and Development. (2015). Evaluating programs and initiatives: chapter 36, section 1. A framework for program evaluation: a gateway to tools. Lawrence, KS: University of Kansas. Retrieved from the Community Tool Box: http://ctb.ku.edu/en/table-of-contents/evaluate/evaluation/framework-for-evaluation/main

- Meyer, P. J. (2003). What would you do if you knew you couldn’t fail? Creating S.M.A.R.T. Goals. Attitude is everything: If you want to succeed above and beyond. Meyer Resource Group, Incorporated.

- Nutbeam, D. (1998). Evaluating health promotion: Progress, problems and solutions. Health Promotion International, 13(1), 27-44.

- O’Connor-Fleming, M. L., Parker, E. A., Higgins, H. C., & Gould, T. (2006). A framework for evaluating health promotion programs. Health Promotion Journal of Australia, 17(1), 61-66.

- Rockwell, K., & Bennett, C. (2004). Targeting outcomes of programs: a hierarchy for targeting outcomes and evaluating their achievement. Faculty Publications: Agricultural Leadership, Education and Communication Department. Paper 48. Retrieved from: http://digitalcommons.unl.edu/aglecfacpub/48/

- USDA and National Institute of Food and Agriculture (NIFA). (n.d.). Community nutrition education (CNE) – logic model detail. Retrieved from: https://nifa.usda.gov/resource/community-nutrition-education-cne-logic-model

- USDA and National Institute of Food and Agriculture (NIFA). (n.d.). Community nutrition education (CNE) – logic model overview. Retrieved from: https://nifa.usda.gov/resource/community-nutrition-education-cne-logic-model

- U.S. Department of Health and Human Services Centers for Disease Control and Prevention (CDC). (2011). Office of the Director, Office of Strategy and Innovation. Introduction to program evaluation for public health programs: A self-study guide. Atlanta, GA: Centers for Disease Control and Prevention. Retrieved from: https://www.cdc.gov/eval/guide/cdcevalmanual.pdf

- W. K. Kellogg Foundation. (2004). W. K. Kellogg Foundation evaluation handbook. Battle Creek, MI. Retrieved from: https://www.wkkf.org/resource-directory/resource/2010/w-k-kellogg-foundation-evaluation-handbook