The Toolbox - Quick Tips and Tools - Evaluating an Educational Presentation

Use these tips to evaluate an educational presentation of consumer food safety information (e.g., a workshop, class, training, or Webinar) when time and resources are limited.

Before Activity/Program

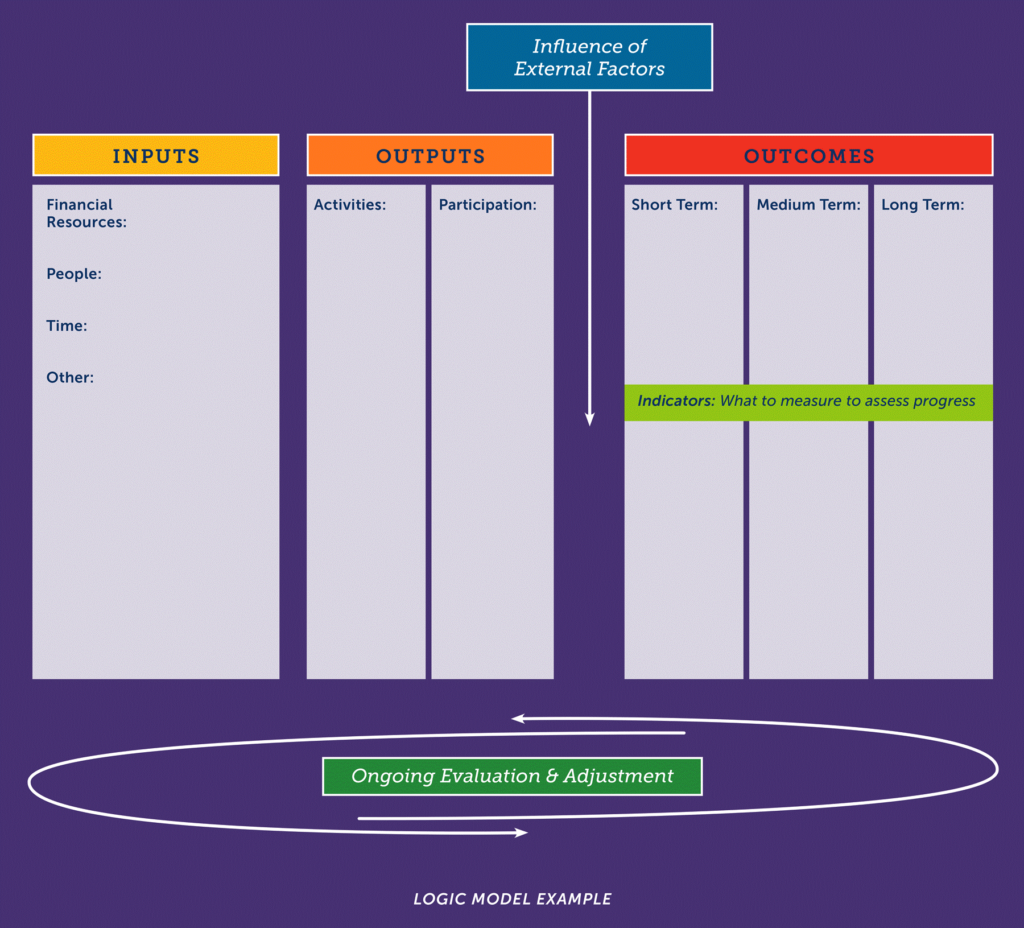

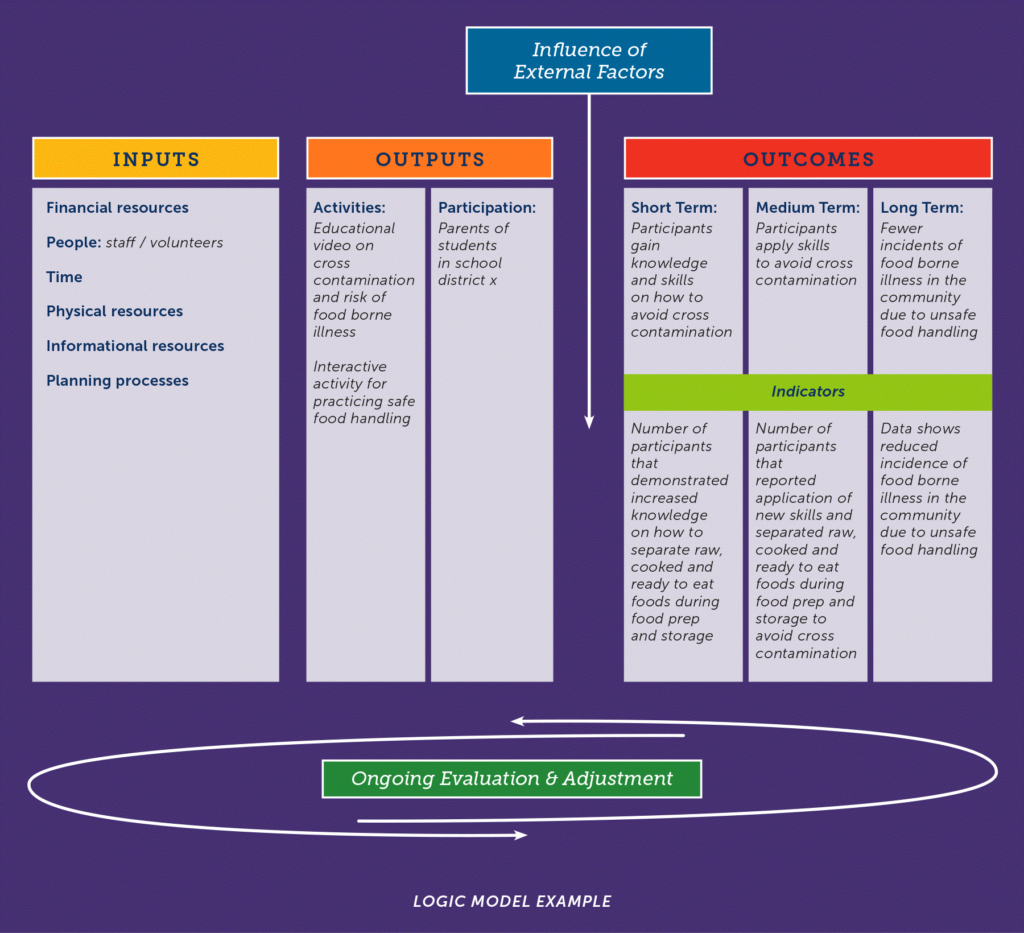

- Clarify the purpose of the evaluation and what you want to find out. Refer back to your program objectives or create a logic model like the one below to identify education goals and outcome indicators.

- Decide how you want to collect evaluation data, such as through in-person interviews, questionnaires, online surveys, or focus groups. The format or setting used for your program activities can help you determine the best data collection method to use. For example, if the activity is an in-person workshop, handing out a written questionnaire immediately following the workshop might be your best option. For an online activity such as a Webinar, you may find it best to create an online survey using free programs such as Qualtrics (https://www.qualtrics.com/) or SurveyMonkey (https://www.surveymonkey.com/).

- Select your evaluation instrument and think about what questions you want to ask. When possible, use pre-validated instruments such as these. You can also use the Participant Evaluation Form template (download below) and adapt it to fit your needs.

- Remember health literacy and cultural sensitivity. Make sure the questions you want to ask are clear, direct, and easy to understand, and that you take into account reading levels of participants. When in doubt, write at a 7th or 8th grade reading level or below.

- Think about the length of time it takes to complete the survey and keep it brief.

- Pilot test your survey instrument and make sure your test is valid and reliable. Adjust and refine your questions based on the feedback you receive in the pilot test.

- Decide when and how often to collect evaluation data. When possible, conduct a pre- and post-test to collect data before and after the activity. You can also use a multiple time series approach, collecting data at least twice before and twice after the activity, to examine trends over time. If only a one-time post-test is feasible, consider selecting a few priority questions to ask evaluation participants before the activity as a partial pre-test.

- Create a brief interview script or outline talking points to explain to participants why the evaluation is important and how their feedback will be used to improve the program.

- When applicable, apply for IRB approval and create an informed consent form for participants. Make sure the informed consent process is easy for participants to understand. Also, consider assessing and confirming comprehension of the information provided to participants throughout the informed consent process. For example, you could use the “teach-back” method where you ask participants to repeat back to you what they learned.
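One way to check draft questions against the 7th or 8th grade reading-level guideline above is to estimate a Flesch-Kincaid grade level. The following is a minimal sketch, not a validated tool: the function names and sample questions are hypothetical, and the syllable counter is a rough vowel-group heuristic, so treat the result as an approximation and confirm it during pilot testing.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count groups of consecutive vowels."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A trailing silent "e" (as in "safe") usually adds no syllable.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate U.S. grade level using the Flesch-Kincaid formula."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


# Hypothetical survey questions, used only for illustration.
plain_question = "Do you wash your hands before you cook?"
dense_question = ("Do you consistently implement appropriate sanitation "
                  "procedures prior to meal preparation?")

for question in (plain_question, dense_question):
    print(f"{flesch_kincaid_grade(question):5.1f}  {question}")
```

A question that scores well above grade 8 is a candidate for shorter sentences and simpler words.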

During Activity/Program

- Provide a sign-in sheet for participants. Consider adding a column to ask participants if they would like to be contacted with additional information about consumer food safety. You can use this list to promote additional food safety education materials or future activities. If participants hesitate to provide their personal information on the sign-in sheet, make it a voluntary request.

- Conduct a process evaluation. You can use tools such as the Activity Tracker Form (download below) or the Budget Tracker Form (download below).

After Activity/Program

- Thank participants for their time and feedback. Consider providing a small thank-you gift such as a gift card, coupon, or small health or food safety related items like a thermometer or toothbrush. If financial constraints are a barrier, reach out to local businesses to request donations for the incentives.

- Let participants know if they will be receiving any follow-up information with the evaluation findings and follow through with any promises or commitments made to participants.

- Enter evaluation data or responses into a spreadsheet program such as Excel or into a statistical software program like SAS (http://www.sas.com/en_us/home.html), SPSS (http://www.ibm.com/analytics/us/en/technology/spss/), or Epi Info (https://www.cdc.gov/epiinfo/index.html) to analyze the data.

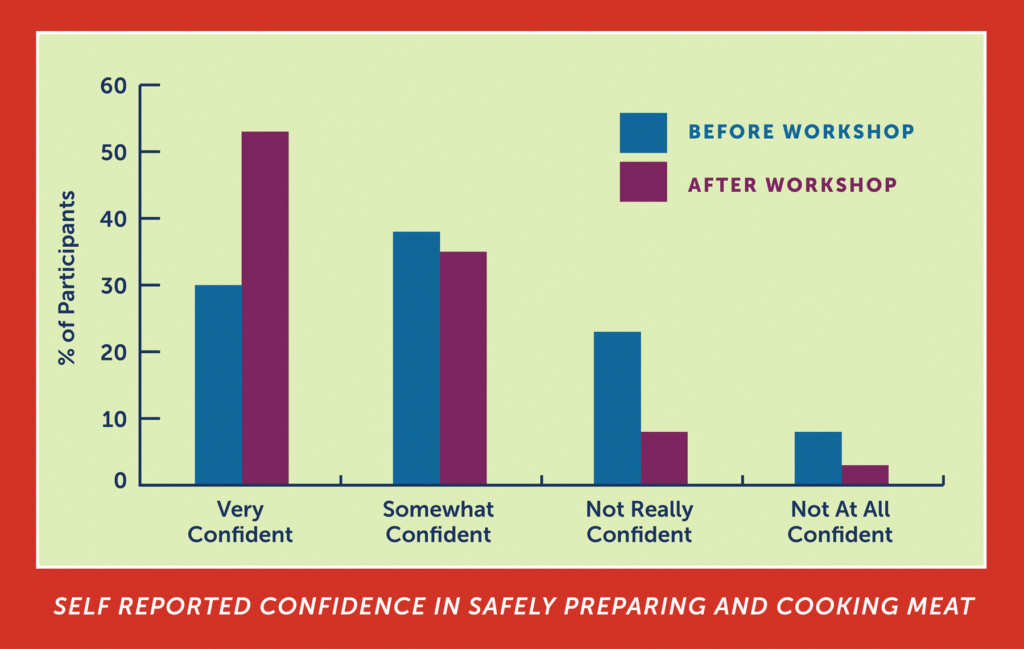

- Use color-coded graphs and tables, such as the example shown here created in Excel, to share your findings.

- Share what you learned with stakeholders, staff, program participants, or other consumer food safety educators. Reflect on the data to explore how your program might be improved. Discuss questions such as:

  - Can any changes be made to better address the needs of the participants?

  - Do any education strategies and methods need to be modified for the program to be more effective in reaching education objectives?

  - Do program staff or volunteers require additional training, resources, or support in a particular area?
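If you collected both a pre-test and a post-test, a few summary numbers are often enough to start the discussion. The sketch below assumes each participant has a paired score (for example, the number of correct answers out of 10). The data, field names, and function name are hypothetical and only illustrate the calculation. The same figures can be produced in Excel or any of the statistical programs mentioned above.

```python
from statistics import mean

# Hypothetical paired scores: correct answers out of 10, before and after.
responses = [
    {"participant": "P01", "pre": 5, "post": 8},
    {"participant": "P02", "pre": 6, "post": 6},
    {"participant": "P03", "pre": 4, "post": 7},
    {"participant": "P04", "pre": 7, "post": 9},
]


def summarize_pre_post(rows):
    """Return simple descriptive statistics for paired pre/post scores."""
    changes = [row["post"] - row["pre"] for row in rows]
    return {
        "n": len(rows),
        "mean_pre": mean(row["pre"] for row in rows),
        "mean_post": mean(row["post"] for row in rows),
        "mean_change": mean(changes),
        # Share of participants whose score went up after the activity.
        "percent_improved": 100 * sum(c > 0 for c in changes) / len(rows),
    }


summary = summarize_pre_post(responses)
for label, value in summary.items():
    print(f"{label}: {value}")
```

These are descriptive figures only. With a small group they show the direction of change but do not establish that the change is statistically significant.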